I am a first-year Computer Science PhD student at Stanford University, advised by Prof. Dorsa Sadigh. I completed my Master's degree in Computer Science (Machine Learning specialization) at Georgia Tech, where I worked in Prof. Dhruv Batra's lab on embodied mobile manipulation tasks. At Georgia Tech, I also had the privilege of being advised by Prof. Judy Hoffman on visual domain adaptation and bias identification. Before Georgia Tech, I earned my bachelor's degree in Computer Science and Engineering, with a minor in Applied Statistics, from IIT Bombay, where I worked on image inpainting under the supervision of Prof. Suyash Awate.

Email / CV / Google Scholar / Twitter / GitHub

(* = equal contribution)

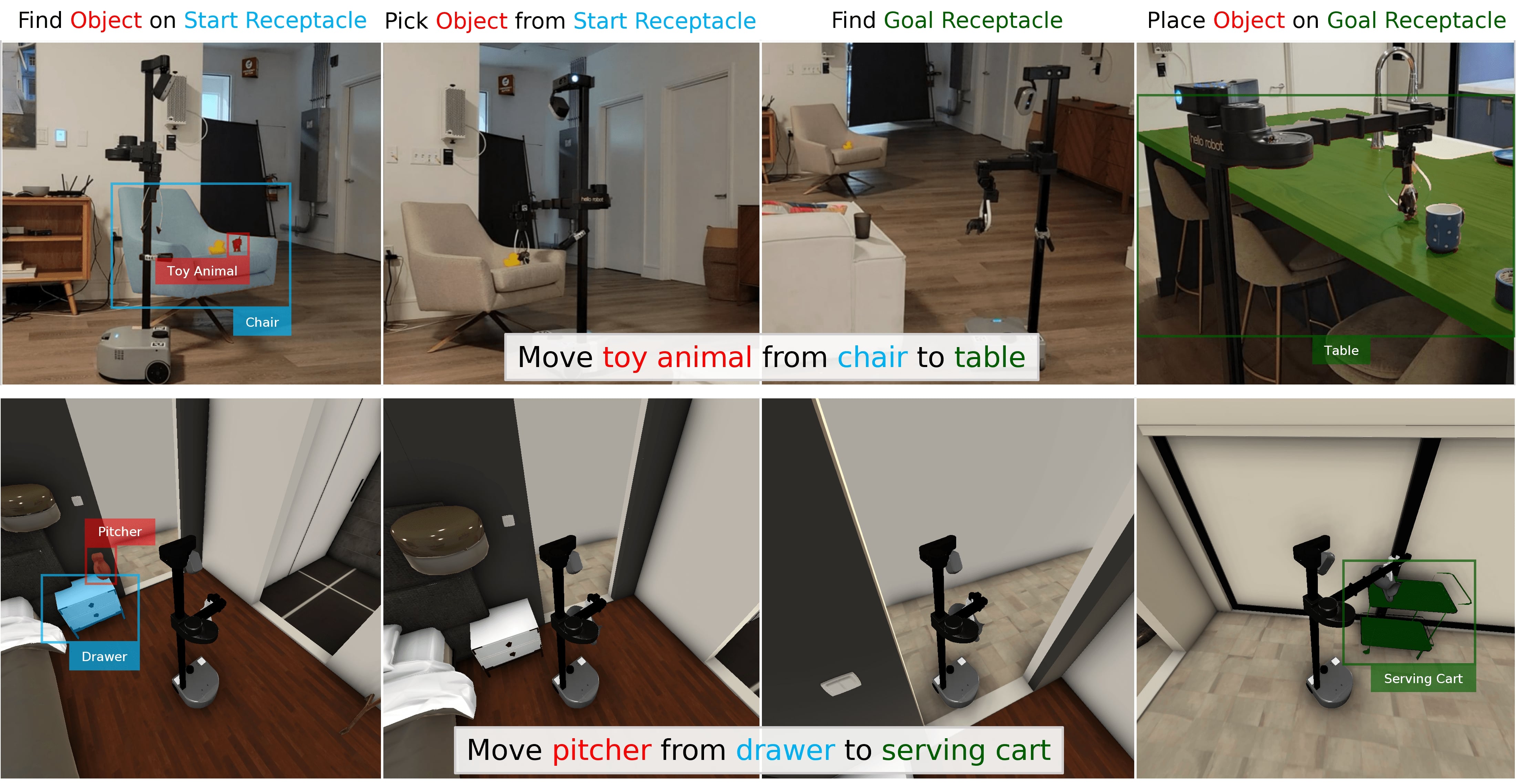

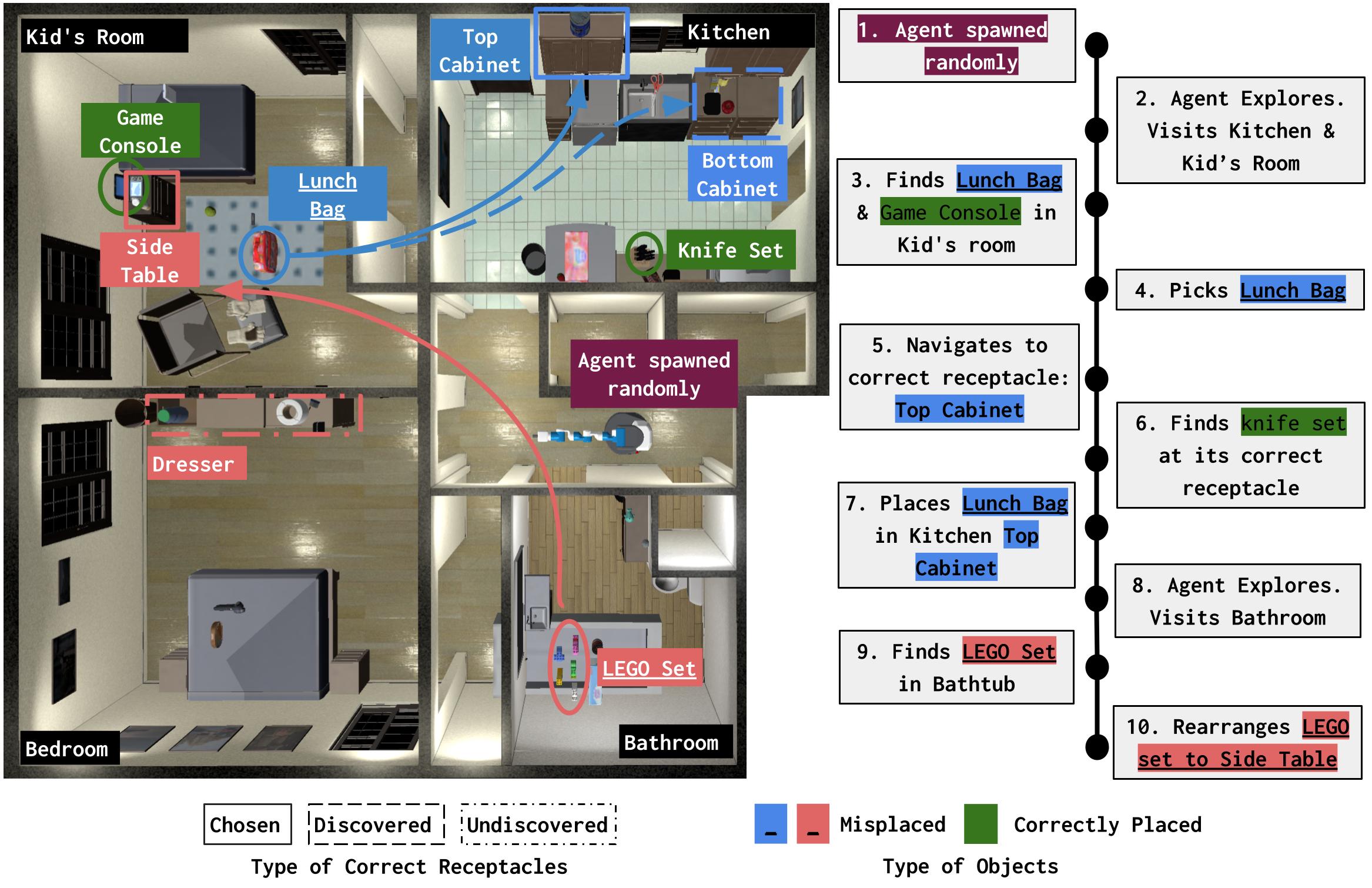

Matthew Chang*, Theophile Gervet*, Mukul Khanna*, Sriram Yenamandra*, Dhruv Shah, So Yeon Min, Kavit Shah, Chris Paxton, Saurabh Gupta, Dhruv Batra, Roozbeh Mottaghi, Jitendra Malik*, Devendra Singh Chaplot*
RSS 2024
arXiv / code

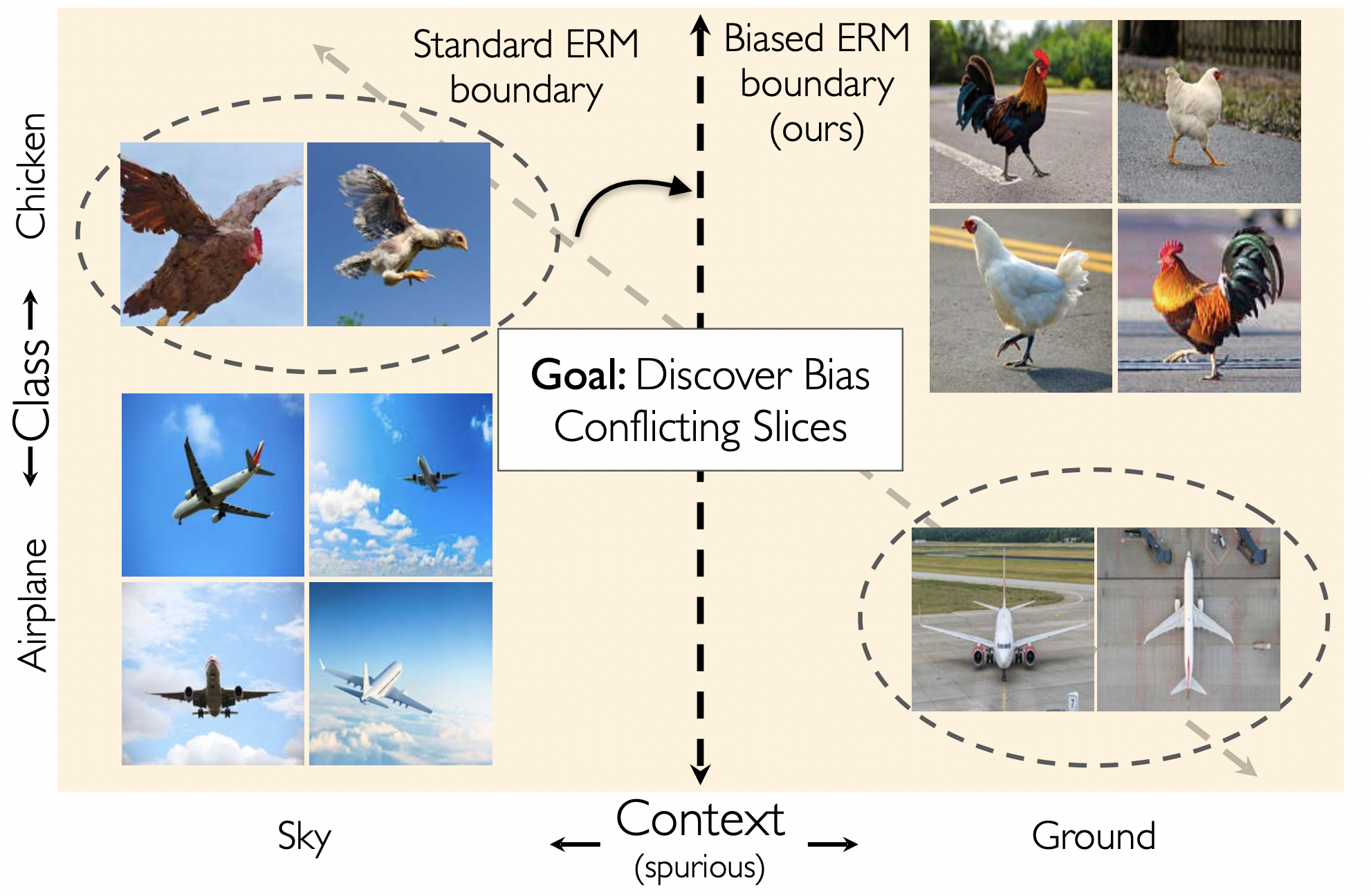

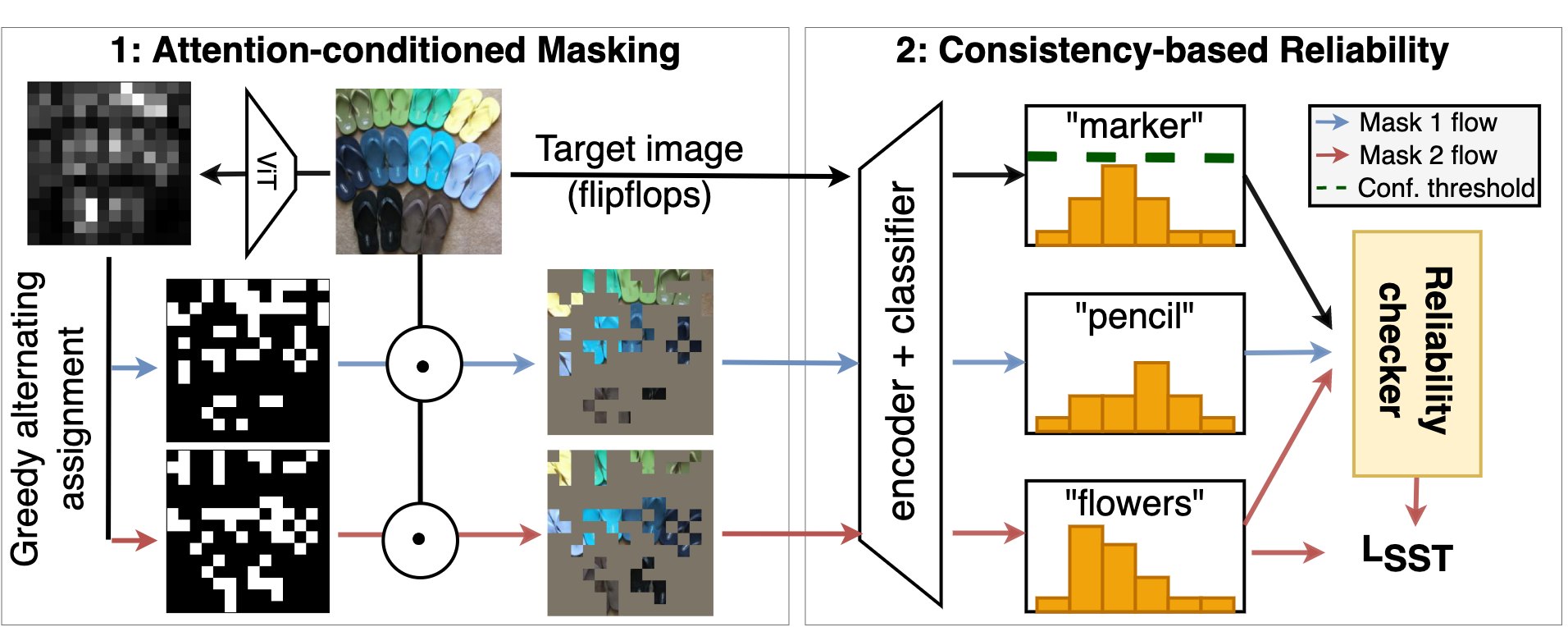

Viraj Prabhu, Sriram Yenamandra, Prithvijit Chattopadhyay, Judy Hoffman
NeurIPS 2023
arXiv / code

Sriram Yenamandra*, Arun Ramachandran*, Karmesh Yadav*, Austin Wang, Mukul Khanna, Theophile Gervet, Tsung-Yen Yang, Vidhi Jain, Alexander William Clegg, John Turner, Zsolt Kira, Manolis Savva, Angel Chang, Devendra Singh Chaplot, Dhruv Batra, Roozbeh Mottaghi, Yonatan Bisk, Chris Paxton
CoRL 2023
arXiv / code

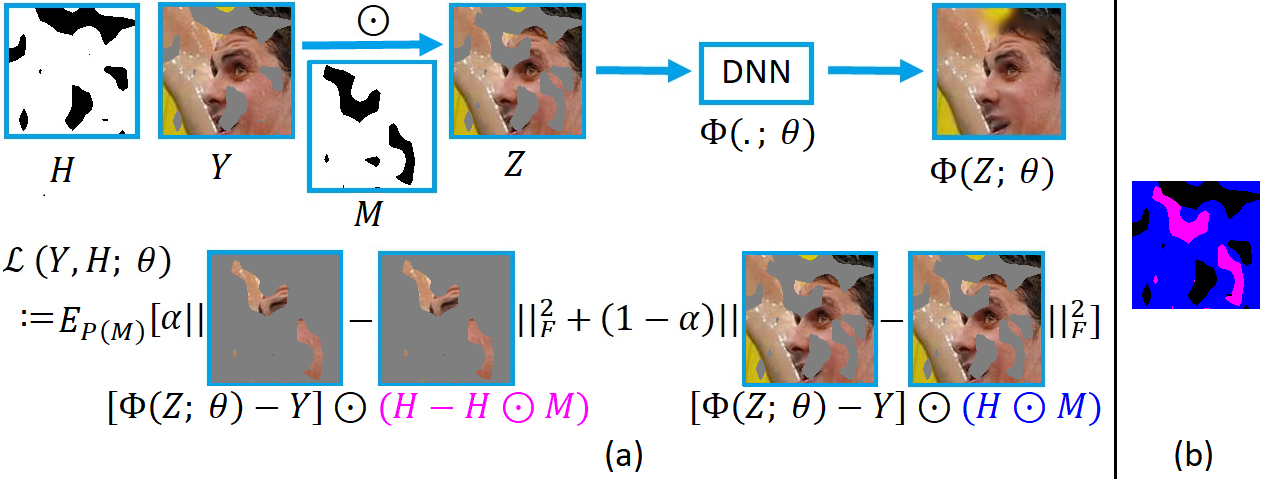

Sriram Yenamandra, Pratik Ramesh, Viraj Prabhu, Judy Hoffman
ICCV 2023
arXiv / code

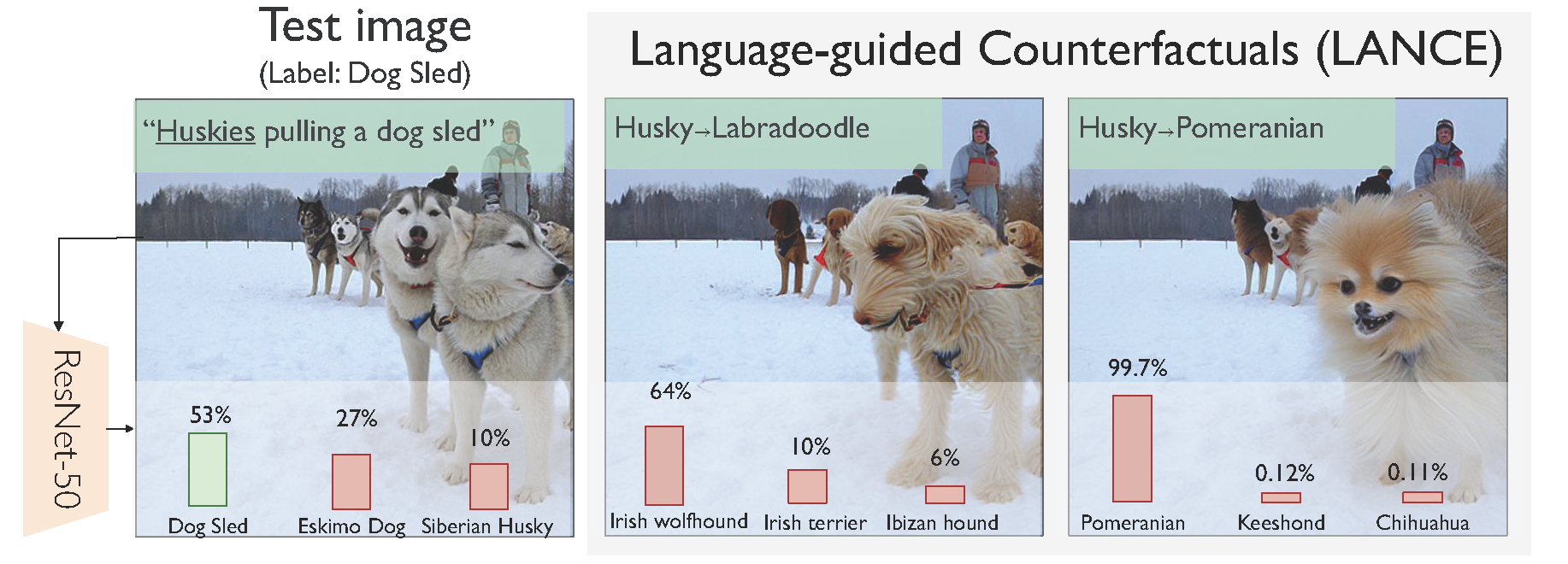

Viraj Prabhu*, Sriram Yenamandra*, Aaditya Singh, Judy Hoffman
NeurIPS 2022
arXiv / code

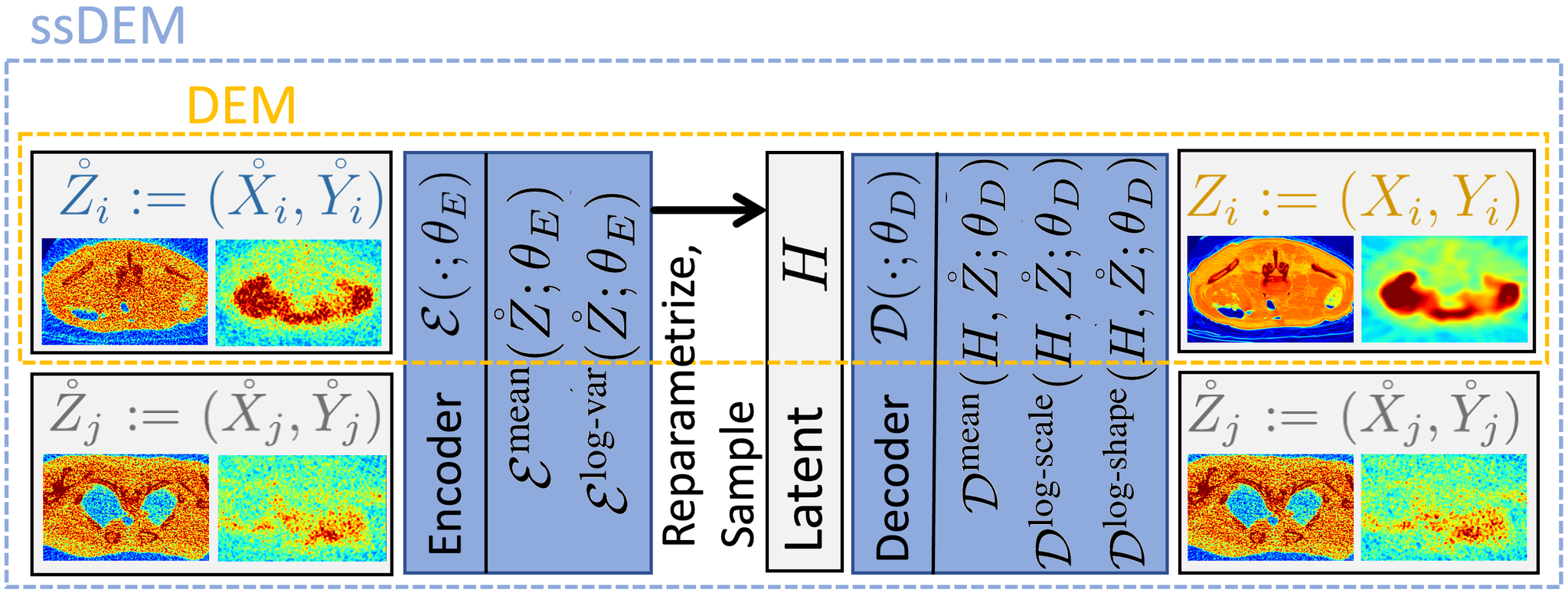

Yash Kant, Arun Ramachandran, Sriram Yenamandra, Igor Gilitschenski, Dhruv Batra, Andrew Szot*, Harsh Agrawal*
ECCV 2022
project page / arXiv / code / colab

Vatsala Sharma, Ansh Khurana, Sriram Yenamandra, Suyash P. Awate
ISBI 2022 (Best Paper Award)
paper

Sriram Yenamandra, Ansh Khurana, Rohit Jena, Suyash P. Awate
ICPR 2020
paper

(Design and CSS courtesy: Jon Barron)